Introduction to Generative AI and Cybersecurity

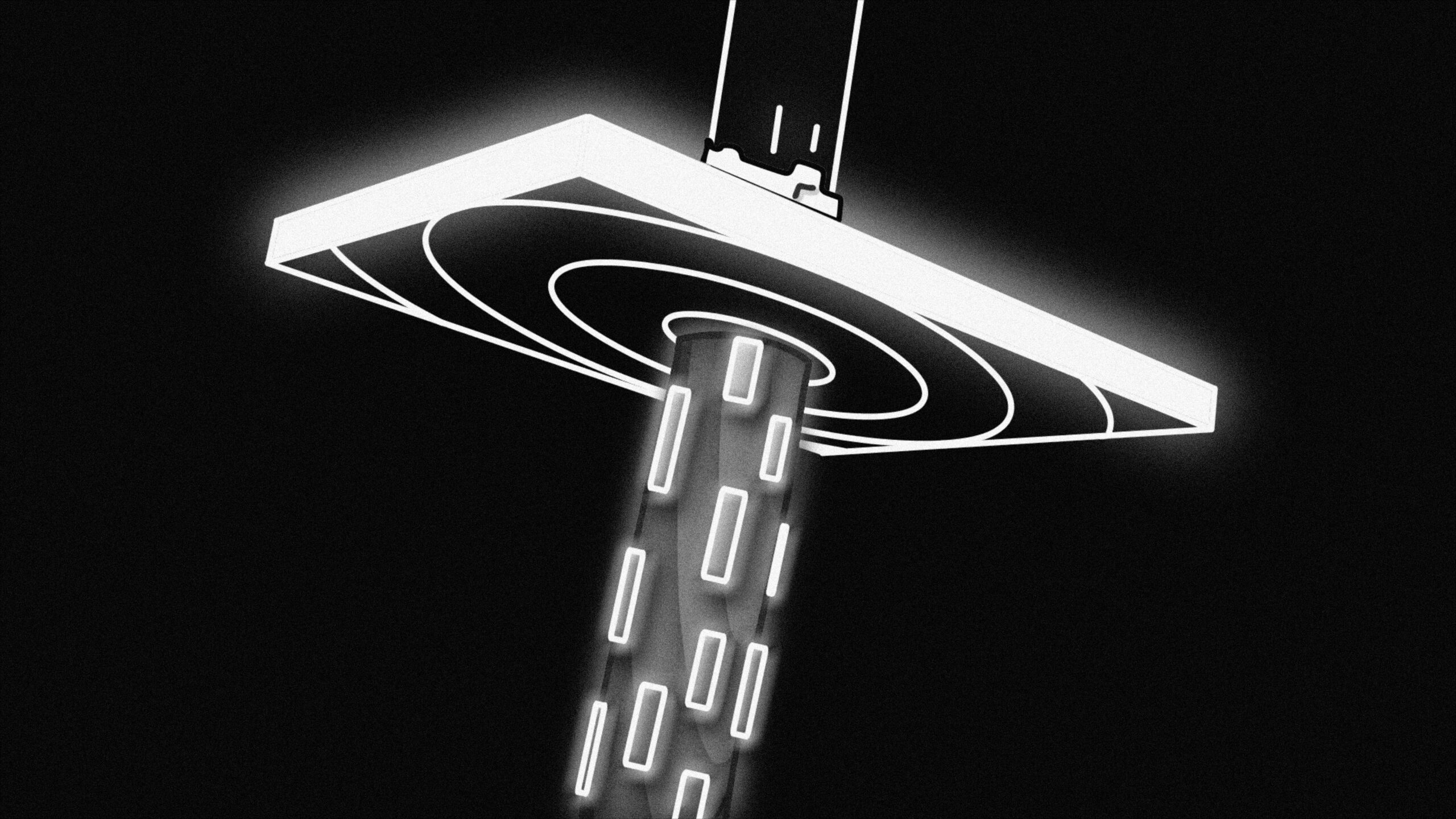

Generative AI, a subset of artificial intelligence, stands out for its ability to create data rather than merely analyze it. Using deep learning models, it can produce realistic images, coherent text, and synthetic voice and video content. Initially celebrated for its creative potential, it is now being applied to cybersecurity, where it is beginning to change how digital defenses are built.

The convergence of generative AI and cybersecurity is not merely coincidental but a necessary evolution driven by the increasing sophistication of cyber threats. Cybersecurity professionals are continuously seeking advanced tools to preempt, detect, and neutralize attacks, and the novel capabilities of generative AI present a promising solution. This interdependence is manifested in various cybersecurity protocols that are becoming progressively reliant on AI technologies to enhance their effectiveness.

At its core, generative AI operates on the principles of machine learning and neural networks. The technology involves training models on vast datasets, allowing the AI to learn underlying patterns and generate new content that mirrors the data it was trained on. This AI can be used to craft numerous outputs like defensive strategies, anomaly detection algorithms, and predictive models that anticipate potential security breaches.
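The train-then-generate idea can be illustrated with a deliberately tiny sketch. It fits a Gaussian distribution to a handful of values and then samples new ones that resemble them. This is an assumption-laden stand-in, not how production generative models work: the data values and the function names `fit_gaussian` and `generate` are hypothetical.

```python
import random
import statistics

# Hypothetical training data: session durations in seconds (illustrative values).
training_data = [30.0, 32.5, 29.0, 31.0, 33.5, 30.5, 28.5, 31.5]

def fit_gaussian(samples):
    """Learn the 'pattern' of the data as a mean and a standard deviation."""
    return statistics.mean(samples), statistics.stdev(samples)

def generate(mean, stdev, n, seed=0):
    """Draw n new synthetic values from the fitted distribution."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    return [rng.gauss(mean, stdev) for _ in range(n)]

mean, stdev = fit_gaussian(training_data)
synthetic = generate(mean, stdev, 5)
print(synthetic)
```

Real generative models replace the two fitted parameters with millions of learned weights, but the loop is the same: estimate the structure of the training data, then sample from that estimate.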

The role of generative AI in cybersecurity extends beyond passive monitoring; it actively enhances security measures by creating sophisticated simulations of potential threats and countermeasures. This capability allows cybersecurity teams to stress-test their systems and develop more resilient defenses against increasingly complex cyberattacks. While the benefits are substantial, it is imperative to understand that the same technology can also be weaponized, posing significant risks that must be managed strategically.

As later sections explore specific applications of generative AI and their consequences, it becomes clear that this pairing will shape the future of cybersecurity. Understanding how generative AI operates and its emerging role in digital defense sets the stage for examining those impacts in detail.

Enhancing Security Protocols with Generative AI

As cyber threats continue to evolve, so too must our defense mechanisms. Generative AI is playing a pivotal role in this transformation by enhancing security protocols across various domains. One primary application is AI-driven threat detection. By leveraging sophisticated algorithms, generative AI can identify malicious activities in real-time. This allows cybersecurity teams to respond promptly and effectively. For instance, generative models can be trained to recognize patterns associated with known threats, leading to early detection and mitigation.

Anomaly detection is another vital area where generative AI proves invaluable. Traditional systems often rely on predefined rules to flag unusual behavior. However, these can be limited and unable to adapt to new, emerging threats. Generative AI, by contrast, can analyze vast amounts of data to uncover subtle deviations that may indicate a breach. By continuously learning and adapting, these systems improve their detection capabilities over time, staying one step ahead of cybercriminals.
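A minimal sketch of the baseline-and-deviation idea follows. It uses a simple z-score against historical values, which is a statistical stand-in for a learned model rather than generative AI itself. The metric (logins per hour), the sample values, and the threshold of 3.0 are all illustrative assumptions.

```python
import statistics

def find_anomalies(baseline, observations, threshold=3.0):
    """Return observations whose z-score against the baseline exceeds threshold."""
    mean = statistics.mean(baseline)
    stdev = statistics.stdev(baseline)
    if stdev == 0:
        # A perfectly flat baseline: any different value counts as a deviation.
        return [x for x in observations if x != mean]
    return [x for x in observations if abs(x - mean) / stdev > threshold]

# Hypothetical data: logins per hour for one account.
baseline_logins_per_hour = [12, 15, 11, 14, 13, 12, 16, 14]
new_observations = [13, 15, 95, 12]

print(find_anomalies(baseline_logins_per_hour, new_observations))  # [95]
```

Unlike a fixed rule such as "flag more than 50 logins", the cutoff here moves with the baseline, so recomputing the baseline on fresh data is what lets the check adapt over time.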

Automated incident response is another significant advance. When a threat is identified, generative AI can help orchestrate a swift and coordinated response. For example, AI can automatically isolate affected systems, trigger alerts, and even execute predefined protocols to mitigate damage. By reducing the time gap between detection and response, generative AI minimizes the potential impact of cyber incidents.

Predictive analytics powered by generative AI offer foresight unmatched by traditional methods. By analyzing historical data and current threat landscapes, these systems can forecast potential vulnerabilities and threat vectors. Organizations can then proactively bolster their defenses, addressing weaknesses before they are exploited. A notable case study involves the healthcare sector, where AI-driven predictive analytics have been used to shield sensitive patient data from targeted attacks.

In real-world applications, companies such as IBM and Google’s DeepMind are often cited as examples of AI applied to cybersecurity. IBM’s Watson, for example, has been applied to identifying and countering advanced persistent threats, while DeepMind’s work has been associated with adaptive security measures that learn and evolve alongside emerging threats.

The integration of generative AI in cybersecurity protocols not only enhances the overall security posture but also provides a dynamic and adaptive approach to combating modern cyber threats.

AI-Driven Threat Detection and Mitigation

Generative AI models have become pivotal in the realm of cybersecurity, particularly in enhancing threat detection and mitigation. These models leverage vast computational power to process immense volumes of data, identifying potential cyber threats in real time. By analyzing patterns, anomalies, and behavioral indicators that might be missed by traditional methods, AI-driven systems significantly heighten the accuracy and speed of detecting threats.

One of the key strengths of generative AI lies in its ability to learn and adapt. By continually ingesting new data, these models evolve, improving their ability to detect sophisticated threats such as advanced malware, phishing attempts, and even zero-day exploits. Through techniques like deep learning, AI can discern subtle deviations from the norm, distinguishing between benign and malicious activities with heightened precision.

Examples of this can be seen in malware identification, where AI systems can scrutinize code in real time for characteristics indicative of malicious intent. In the case of phishing, AI models can evaluate email content, URLs, and sender legitimacy far faster than manual review, helping to halt potential breaches before they impact organizational networks.
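To make the three phishing signals concrete (sender legitimacy, email content, and URLs), here is a rough rule-based sketch. A real system would use a trained model rather than hand-written rules. The phrase list, the weights, and the function name `phishing_score` are hypothetical choices for illustration.

```python
import re
from urllib.parse import urlparse

# Hypothetical phrases that often appear in phishing lures.
SUSPICIOUS_PHRASES = ("verify your account", "urgent", "password expires")

def phishing_score(sender, claimed_domain, body):
    """Return a rough risk score; higher means more suspicious."""
    score = 0
    # Sender legitimacy: does the address domain match the claimed organization?
    sender_domain = sender.rsplit("@", 1)[-1].lower()
    if sender_domain != claimed_domain.lower():
        score += 2
    # Content: count known lure phrases.
    lowered = body.lower()
    score += sum(1 for phrase in SUSPICIOUS_PHRASES if phrase in lowered)
    # URLs: links that point at a raw IPv4 address are a common warning sign.
    for url in re.findall(r"https?://\S+", body):
        host = urlparse(url).hostname or ""
        if re.fullmatch(r"\d{1,3}(\.\d{1,3}){3}", host):
            score += 2
    return score

suspicious = phishing_score(
    "support@examp1e-bank.test",
    "example-bank.test",
    "URGENT: verify your account at http://192.0.2.10/login",
)
benign = phishing_score(
    "news@example.test",
    "example.test",
    "Monthly newsletter at https://example.test/news",
)
print(suspicious, benign)  # 6 0
```

A learned model effectively discovers features and weights like these from labeled examples instead of having them written by hand, which is what lets it keep up with new lure styles.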

Several advancements in AI algorithms have notably bolstered threat detection capabilities. Techniques such as reinforcement learning and neural networks enable more sophisticated detection models. Additionally, unsupervised learning allows AI systems to uncover previously unseen threat vectors without relying on labeled examples, contributing to a more comprehensive cybersecurity posture.

Generative AI’s role in threat mitigation doesn’t end with detection. Once a threat is identified, AI systems can execute swift countermeasures. Automated responses, such as isolating affected systems or neutralizing the threat before it propagates, exemplify the proactive steps AI-enabled cybersecurity frameworks can undertake.

Ultimately, the integration of generative AI in cybersecurity represents a significant leap forward, marrying advanced data processing with adaptive learning models to foster a dynamic, resilient defense against the evolving landscape of cyber threats.

Predictive Analytics for Preemptive Security

Predictive analytics, driven by the capabilities of generative AI, has become a crucial component of modern cybersecurity strategies. This approach allows cybersecurity teams to anticipate and counteract potential threats before they evolve into actual attacks. At its core, predictive analytics involves the collection and examination of massive datasets, which machine learning algorithms use to identify patterns and predict imminent cyber threats. The process begins with the aggregation of data from various sources, including network logs, social media, dark web forums, and historical attack data.

Machine learning models, including generative ones, process this data to discover anomalies and emerging patterns that could signify a possible attack. These models continuously learn from the data they analyze, becoming more accurate over time. This continuous refinement is pivotal for predicting new and sophisticated attack vectors that mimic legitimate user behavior yet pose significant security risks. For instance, by recognizing specific types of unauthorized access or unusual data exfiltration activity, generative AI can provide early warnings, giving organizations a tactical advantage to reinforce their defenses.
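The early-warning idea for data exfiltration can be sketched with a very simple forecaster. The example below uses an exponentially weighted moving average (EWMA) in place of a learned model, and raises a warning when the latest outbound volume far exceeds the forecast. The traffic figures, the smoothing factor `alpha`, and the `ratio` cutoff are assumptions chosen for illustration.

```python
def ewma_forecast(history, alpha=0.3):
    """Forecast the next value as an exponentially weighted moving average."""
    forecast = history[0]
    for value in history[1:]:
        forecast = alpha * value + (1 - alpha) * forecast
    return forecast

def early_warning(history, latest, alpha=0.3, ratio=2.0):
    """Warn when the latest value is more than `ratio` times the forecast."""
    return latest > ratio * ewma_forecast(history, alpha)

# Hypothetical daily outbound traffic for one host, in megabytes.
outbound_mb = [100, 110, 95, 105, 102]

print(round(ewma_forecast(outbound_mb), 3))
print(early_warning(outbound_mb, 450))  # True: far above the expected volume
print(early_warning(outbound_mb, 120))  # False: within the normal range
```

Because recent days carry more weight than older ones, the forecast follows gradual changes in legitimate usage while still reacting to sudden spikes.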

Many organizations have already reaped substantial benefits from integrating predictive analytics into their cybersecurity frameworks. Consider the success story of a major financial institution that implemented predictive analytics to safeguard against fraudulent activities. By leveraging generative AI, the institution was able to decrease the incidence of fraudulent transactions by over 30%, preemptively identifying and neutralizing threats before they could cause substantial harm. Similarly, multinational technology companies have adopted predictive analytics to secure intellectual property and confidential information, thus maintaining their competitive edge in the marketplace.

Ultimately, predictive analytics powered by generative AI is transforming the landscape of cybersecurity. This sophisticated method not only enhances traditional security measures but also provides a proactive approach to threat management, ensuring that organizations can stay one step ahead in an ever-evolving digital battleground.

Automation in Incident Response

Generative AI has brought a transformative approach to incident response in cybersecurity, offering notable gains in speed and efficiency. By leveraging sophisticated algorithms, automated systems can rapidly identify and assess threats, reducing response time, which is a critical factor in mitigating potential damage. These AI-driven solutions detect anomalies and flag suspicious activities much faster than traditional methods, enabling organizations to neutralize threats before they escalate.

One of the key benefits of employing generative AI in incident response lies in its ability to handle voluminous data with ease. By continuously monitoring network activities, AI systems can analyze vast datasets to identify patterns that suggest security breaches. Once a threat is detected, the AI can execute predefined response protocols, such as isolating affected systems, alerting relevant personnel, or even neutralizing the threat autonomously. This high level of automation reduces the dependency on human intervention, allowing cybersecurity teams to focus on more strategic tasks.
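The "predefined response protocols" described above are often organized as playbooks: ordered lists of actions keyed by incident type. The sketch below shows that structure only. The `Incident` class, the action functions, and the playbook contents are hypothetical, and the actions merely record what they would do instead of calling a real network or endpoint API.

```python
from dataclasses import dataclass, field

@dataclass
class Incident:
    host: str
    kind: str  # e.g. "malware" or "phishing"
    actions_taken: list = field(default_factory=list)

def isolate_host(incident):
    # Placeholder: a real system would call a network or endpoint API here.
    incident.actions_taken.append(f"isolated {incident.host}")

def alert_team(incident):
    # Placeholder: a real system would page or message the on-call responder.
    incident.actions_taken.append(f"alerted on-call about {incident.kind}")

# Hypothetical playbooks: ordered response steps per incident type.
PLAYBOOKS = {
    "malware": [isolate_host, alert_team],
    "phishing": [alert_team],
}

def respond(incident):
    """Run every step of the matching playbook; unknown types only alert."""
    for step in PLAYBOOKS.get(incident.kind, [alert_team]):
        step(incident)
    return incident.actions_taken

print(respond(Incident(host="ws-042", kind="malware")))
```

Keeping the playbooks as data makes them easy to audit and change without touching the dispatch logic, and the fallback to `alert_team` ensures an unrecognized incident type still reaches a human.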

Generative AI also excels in its adaptability and learning capacity. Through continuous exposure to new threats and response outcomes, these systems improve their algorithms, becoming more adept at recognizing and countering emerging threats. This evolution is crucial in staying ahead of cyber adversaries who continually develop more sophisticated attack techniques. Moreover, AI-driven automation ensures consistent and accurate implementation of response strategies, minimizing the risk of human error during critical incidents.

Nevertheless, reliance on AI for incident response is not without potential drawbacks. One significant concern is the overdependence on technology, which may lead to complacency among cybersecurity practitioners. In addition, AI systems, while highly intelligent, are not infallible and can be susceptible to false positives or even evasion by clever attackers. Striking the right balance between AI automation and human oversight is essential to maintaining an effective cybersecurity posture. Furthermore, ethical considerations and the transparency of AI decision-making processes require ongoing attention to prevent unintended consequences.

Overall, the integration of generative AI in incident response marks a significant advancement in cybersecurity measures. While it offers numerous benefits by automating and streamlining processes, careful implementation and continuous oversight are paramount in ensuring its efficacy and reliability in protecting digital assets.

Potential Risks Introduced by Generative AI

The integration of generative AI into cybersecurity, while beneficial, introduces a spectrum of new risks that necessitate careful consideration and strategic mitigation. One of the most pressing concerns is the rise of adversarial attacks. These are sophisticated strategies where attackers employ generative AI to craft malicious inputs designed to deceive AI-driven security systems. By generating seemingly normal data that can bypass standard detection mechanisms, attackers can penetrate defenses, leading to significant security breaches. The evolving nature of these threats underscores the need for continuous advancement in defensive algorithms and monitoring systems.
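One defensive response is robustness testing: checking whether a detector still flags a known-bad sample after small, meaning-preserving changes. The toy sketch below compares an exact-match detector with one that normalizes its input first. The blocklisted token, the variants, and both detector functions are hypothetical, and real adversarial inputs against machine learning models are far more subtle than these string tweaks.

```python
def naive_detector(text):
    """Toy detector: flags text containing an exact blocklisted token."""
    return "malicious-payload" in text.lower()

def normalizing_detector(text):
    """Same rule, applied after undoing simple look-alike substitutions."""
    table = str.maketrans({"0": "o", "1": "i"})
    cleaned = text.lower().translate(table).replace("_", "-")
    return "malicious-payload" in cleaned

# Hypothetical variants of one known-bad marker.
variants = [
    "malicious-payload",
    "MALICIOUS-PAYLOAD",
    "malicious_payload",
    "mal1cious-payl0ad",
]

def detection_rate(detector, samples):
    """Fraction of samples the detector flags."""
    return sum(detector(s) for s in samples) / len(samples)

print(detection_rate(naive_detector, variants))        # 0.5
print(detection_rate(normalizing_detector, variants))  # 1.0
```

Measuring the detection rate over generated variants gives a concrete number to track as defenses are updated, which is one way to put continuous advancement of defensive algorithms into practice.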

Another critical aspect is the ethical and technical challenges posed by managing these risks. The development and application of generative AI must align with robust ethical standards to prevent misuse. For instance, there are concerns about the dual-use nature of AI technologies which can be repurposed maliciously. Technical challenges also abound, particularly in ensuring that AI models can identify and respond to novel attack vectors. This often involves developing sophisticated machine learning models capable of analyzing vast amounts of data in real-time to detect anomalies indicative of a breach.

The management of these risks extends into the operational sphere, where the integration of generative AI necessitates the deployment of cybersecurity professionals well-versed in AI technologies. These experts must continually adapt to new attack methods and update protocols to safeguard against evolving threats. Furthermore, companies must implement stringent AI governance frameworks that include regular audits, transparency in AI decision-making, and compliance with privacy standards.

Ultimately, while generative AI holds the promise of significantly enhancing cybersecurity measures, it simultaneously introduces complex risks that require ongoing diligence, sophisticated technical solutions, and ethical vigilance. By addressing these challenges head-on, organizations can harness the full potential of generative AI, driving innovation while maintaining robust security postures.

Ethical and Governance Considerations

The integration of generative AI into cybersecurity, though promising, stirs a myriad of ethical and governance challenges. One primary concern revolves around privacy. As generative AI systems process vast amounts of data to predict and counteract cyber threats, the potential for inadvertent or deliberate misuse escalates. Ensuring that personal and sensitive information remains confidential and secure is paramount. The necessity for robust data encryption techniques and stringent access controls becomes ever more apparent in this evolving landscape.
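Alongside encryption and access controls, one common way to limit exposure of personal data in security logs is pseudonymization. This is a related technique, not encryption. The sketch below replaces a user identifier with a keyed hash using Python's standard `hmac` module. The key value, the record fields, and the `pseudonymize` helper are illustrative assumptions.

```python
import hashlib
import hmac

def pseudonymize(value, key):
    """Replace an identifier with a keyed SHA-256 hash (hex string)."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()

# Hypothetical key: in practice, load it from a secrets manager and rotate it.
SECRET_KEY = b"example-key-rotate-me"

record = {"user": "alice@example.test", "event": "login_failed"}
safe_record = {**record, "user": pseudonymize(record["user"], SECRET_KEY)}

print(safe_record)
```

The same user always maps to the same token, so analysts and models can still correlate events per user, while recovering the original identifier requires access to the key.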

Consent also emerges as a critical issue. With generative AI systems, users often remain unaware of the extent to which their data is utilized. Transparent consent mechanisms are imperative to guarantee that individuals are fully informed about how their data is used and safeguarded. This challenge calls for innovation in user-experience design to ensure consent processes are not only comprehensive but also understandable and user-friendly.

The risk of bias in AI algorithms is another significant concern. Algorithms trained on biased datasets can perpetuate existing inequalities and introduce new forms of discrimination. It becomes necessary to integrate bias detection and mitigation strategies within the development stage of these algorithms. Regular audits of AI systems and inclusive practices in data collection can help ensure a fairer and more ethical deployment of AI in cybersecurity.
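A regular audit can start with something as simple as comparing error rates across groups. The sketch below computes the false positive rate per group, meaning how often benign activity is flagged. The group labels and records are made up for illustration, and a real audit would use more data and more than one fairness metric.

```python
from collections import defaultdict

def false_positive_rates(records):
    """records: iterable of (group, flagged, actually_malicious) tuples."""
    flagged_benign = defaultdict(int)
    total_benign = defaultdict(int)
    for group, flagged, malicious in records:
        if not malicious:
            total_benign[group] += 1
            if flagged:
                flagged_benign[group] += 1
    return {g: flagged_benign[g] / total_benign[g] for g in total_benign}

# Hypothetical audit sample: benign logins from two regions, plus one true attack.
audit_sample = [
    ("region_a", True, False), ("region_a", False, False),
    ("region_a", False, False), ("region_a", False, False),
    ("region_b", True, False), ("region_b", True, False),
    ("region_b", False, False), ("region_b", False, False),
    ("region_b", True, True),
]

print(false_positive_rates(audit_sample))  # {'region_a': 0.25, 'region_b': 0.5}
```

A large gap between groups, as in this made-up sample, is a signal to examine the training data and features for that group before the system is trusted further.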

Additionally, regulatory and policy measures are critical in managing the ethical use of generative AI in cybersecurity. Governments and international bodies need to collaborate on setting clear standards and guidelines that address privacy, consent, and fairness. Policies such as the General Data Protection Regulation (GDPR) in Europe provide a framework that could be adapted and extended to the global context, promoting responsible AI usage.

Strong governance frameworks should be established to oversee the deployment and continual monitoring of generative AI applications in cybersecurity. These frameworks should include stakeholder representation from diverse fields to ensure a holistic approach to ethical considerations, blending the technical with the socio-political aspects.

Future Trends and the Role of Human Oversight

As generative AI continues to evolve, its application in cybersecurity shows both considerable promise and complex challenges. Future trends in this domain point toward more AI-driven solutions capable of predictive threat analysis, real-time anomaly detection, and autonomous response. These advancements will likely strengthen security measures by significantly reducing the time and effort required to identify and mitigate cyber threats.

However, with the rise of sophisticated generative AI systems, the landscape of cyber threats is also anticipated to evolve. Malicious actors may leverage AI to develop more advanced and harder-to-detect cyberattacks, such as AI-generated phishing scams and more complex forms of malware. This escalating arms race between defensive and offensive technologies reinforces the need for adaptive and continuously evolving cybersecurity strategies.

Human oversight will play a pivotal role in the efficacy and ethics of AI-powered cybersecurity measures. While AI can process and analyze vast amounts of data with unprecedented speed, human intelligence remains crucial for contextual understanding and ethical decision-making. Continuous evaluation and regulatory scrutiny are essential to ensure AI systems operate within ethical boundaries and remain aligned with the intended security objectives.

To successfully integrate AI with human intelligence in cybersecurity protocols, organizations should adopt a hybrid approach. This entails leveraging AI for its computational strengths while maintaining critical human intervention points for data interpretation and decision-making. Establishing clear protocols for human oversight, conducting regular AI audits, and fostering collaboration between AI experts and cybersecurity professionals are best practices that can enhance the efficacy and reliability of AI-driven security measures.
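One way to encode "critical human intervention points" is to route each alert by the model's confidence: act automatically only when confidence is very high, and send the uncertain middle band to an analyst. The sketch below assumes the model emits a confidence score between 0 and 1. The threshold values and the route names are illustrative, not recommended settings.

```python
def route_alert(confidence, auto_threshold=0.95, review_threshold=0.5):
    """Decide how to handle an alert based on model confidence in [0, 1]."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence >= auto_threshold:
        return "auto_contain"   # act immediately, but still log for later audit
    if confidence >= review_threshold:
        return "human_review"   # an analyst decides before any action is taken
    return "log_only"           # keep a record; no action

for score in (0.99, 0.70, 0.10):
    print(score, route_alert(score))
```

Raising `auto_threshold` shifts more decisions to people and reduces the impact of false positives, at the cost of slower response. Where that trade-off sits is a policy choice to revisit during regular AI audits.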

In this rapidly advancing field, balancing innovation with vigilance will be key to harnessing the full potential of generative AI in cybersecurity. By strategically integrating AI and human oversight, societies can aspire to fortify their defense mechanisms, staying one step ahead in an ever-evolving cyber threat landscape.